Big data, next-generation phenotyping, and the possibilities for precision medicine

This post is based on a presentation given at the Precision Medicine World Conference.

As researchers and clinicians expand their investigation of the relationship between phenotypes and genotypes, the importance of accurate phenotypic analysis grows. That means the field needs a uniform understanding of what phenotypes are, how to describe them, and how to assign traits.

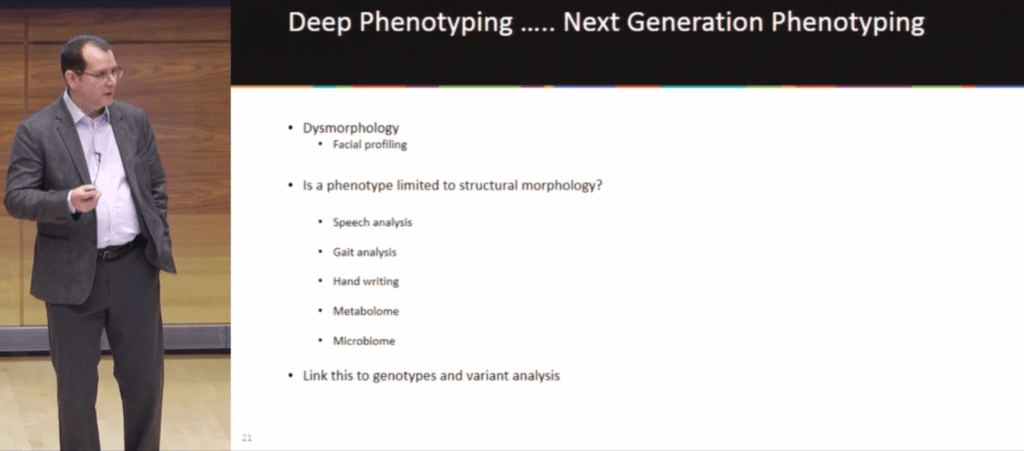

In differentiating deep phenotyping from next-generation phenotyping, Dr. Donald Basel (Medical Director of the Genetics Center at the Children’s Hospital of Wisconsin) asked Precision Medicine World Conference attendees, “Where do we limit our thoughts as to what a phenotype represents? Is it limited to structural morphology or do we consider all aspects of the phenotype?”

Deep phenotyping, which has existed since the 1950s, made a leap forward in dysmorphology in 2009, when NHGRI developed a common language, the hierarchical Human Phenotype Ontology (HPO). Next-generation phenotyping expands that concept to include not just structural morphology but also aspects like speech analysis, gait analysis, and all of the “omics” (metabolomics, microbiomics, etc.).

Even with the benefits of a common language, clinicians still face the challenge of applying HPO terms properly. Dr. Basel (also a member of the FDNA Scientific Advisory Board) noted that a tool like Face2Gene, a suite of phenotyping applications, does some of this identification for the clinician. He gave an example comparing human and machine analysis of Cornelia de Lange syndrome and phenotypically similar syndromes. A panel of experts identified patients with Cornelia de Lange 77 percent of the time and spotted similar syndromes 87 percent of the time, with a clinical sensitivity of 82 percent and a specificity of 89 percent. FDNA’s DeepGestalt technology correctly selected Cornelia de Lange 94 percent of the time and similar phenotypes 100 percent of the time, with a sensitivity of 86 percent and a specificity of 100 percent.
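
For readers less familiar with the terms, sensitivity is the share of truly affected patients that a test flags, and specificity is the share of unaffected patients that it correctly clears. The short Python sketch below shows how the two figures are calculated. The function names and the patient counts are made up for illustration only; they are not taken from the study Dr. Basel described.

```python
def sensitivity(true_pos: int, false_neg: int) -> float:
    """Fraction of affected patients that were correctly identified."""
    return true_pos / (true_pos + false_neg)


def specificity(true_neg: int, false_pos: int) -> float:
    """Fraction of unaffected patients that were correctly ruled out."""
    return true_neg / (true_neg + false_pos)


# Hypothetical counts for illustration only (NOT data from the study):
# 20 patients with the syndrome, 50 patients without it.
true_pos, false_neg = 18, 2   # affected: 18 recognized, 2 missed
true_neg, false_pos = 45, 5   # unaffected: 45 cleared, 5 wrongly flagged

print(f"sensitivity: {sensitivity(true_pos, false_neg):.0%}")
print(f"specificity: {specificity(true_neg, false_pos):.0%}")
```

A test can score well on one measure and poorly on the other, which is why the two are usually reported together.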

Of course, as Dr. Basel pointed out, when it comes to Cornelia de Lange, a well-trained dysmorphologist can “essentially walk into a baby’s room and make this diagnosis.” The more profound results are for patients whose phenotypes are far more difficult to diagnose because their facial traits are subtle, and for helping non-dysmorphologists recognize these phenotypes.

In a March 2018 publication that Dr. Basel described as “quite daring,” researchers took four syndromes with inborn metabolic errors and mild facial feature coarsening, plus Nicolaides-Baraitser syndrome (which has a similar phenotype), to test the software.

“Just looking at pure facial imaging the software was able to accurately predict the specific disorder in 64 percent of the cases. If you added a single feature into that feature set, the diagnostic accuracy increased to 87 percent.”

This means clinicians using Face2Gene may be presented with syndrome recommendations that they might have otherwise disregarded.

Just as clinicians gain expertise with practice, Face2Gene grows smarter as it sees more cases. “The more we use it, the better it gets,” Dr. Basel said.

This brought him back to his original question: if artificial intelligence technologies can grow to analyze more and more data of increasingly varied types, “Where are the limits?”

Donald Basel presents at PMWC